The past few months I've been working on a little rendering tech demo in my free time and it's my pleasure to finally be able to release it. You can check it out by downloading the demo here: Broken Marble. In this post I'll dig a little into what went into the demo.

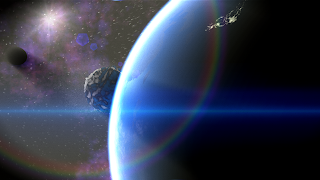

In addition to being a great little learning experience it's been a nice way for me to keep up my graphics programming chops. Originally it started out as a small GPU Raytracing experiment and ended up including a lot of interesting rendering features. The demo is about a minute and a half long and the soundtrack is an edited version of one of my favorite songs from the freely available Bulletstorm soundtrack ("This one last time"). In essence the high-concept is an asteroid impacting with the Earth (being the Blue Marble, as hinted at by the name).

To take this from concept to reality I made a simple storyboard with the camera shots that I wanted to capture coinciding with the effects I wanted to show off. I then keyframed a rough mock-up which I iterated on and refined over time. I derived a lot of inspiration from the Genesis Effect in Star Trek 2 (a childhood favorite of mine) as well as this video. The destruction effect was obviously easier to do than terraforming though I may try to do a real-time demo of the Genesis Effect as well one day.

I started this demo around last November and worked on it on and off, with a few stretches of no work being done at all. It was probably about 40 or 50 hours of work total, which actually seems like a lot, but keep in mind I was implementing everything from scratch and most of it was pure learning by trial and error. Feature creep was something I tried to avoid since "real artists ship", but to be honest 90% of the features were feature creep brought on by curiosity and the natural needs of the project (in the case of the tools I wrote).

Features I implemented for this demo are:

* Planet Raytracing with realistic (physically based) Atmosphere Lighting

* (Procedural) Planet Destruction/Disintegration Effect

* Raytraced Displacement Mapping

* Raytraced and Analytic Shadows (AO using a technique similar to how a spotlight works)

* Bokeh Depth-of-Field, both software and hardware implementation (utilizing VTF)

* Advanced particle system with lighting, proper sorting (layered using premultiplied alpha), proper DOF blurring, efficient particle recycling, lower fill (offscreen rendered at lower rez), depth softening (soft particles) and multiple emitter types (point, rectangle, circular, box, sphere)

* Skybox Motion-Blur exploiting a novel approach to reduce the number of required samples by biasing Cubemap Mips per-sample

* Volumetric Lighting

* A number of Post-FX like HDR Glow, Scanlines, DOF, Film Grain, Color-Separation (didn't make it in), Vignette

* Spherically Projected Decals (Asteroid Crater)

* CineScript: General but powerful interpolated keyframe system that can be used to drive camera motion, shader values and any other time specific events that require interpolated values or precise control. Currently supports Catmull-Rom and Linear Interpolation

* Anamorphic Lens flares (done completely on GPU in clip-space, including occlusion check)

* FMOD support

I also implemented a number of tools worth mentioning:

* (Software) longitude map to cube map generator (with mipmaps)

* DataLinker: Tool and associated Library that appends files to an executable and creates a file system that can access these files eliminating the need for assets as loose files

* Shortcuts and special commands that aid in creating keyframes and editing the CineScript files

* Debug visualization and performance tracking tools

* Procedural Starfield Cubemap Generator using Perlin Noise (which unfortunately has been lost to the ether)
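The heart of a longitude map to cube map generator like the one listed above is the mapping from a 3D direction to a coordinate in the longitude/latitude image. The following is a minimal sketch of that mapping, not the tool's actual code. The names `Vec3`, `Vec2` and `directionToLongLat` are hypothetical, and the axis convention (+Y up, +Z at the horizontal centre of the map) is an assumption that may differ from the demo's.

```cpp
#include <algorithm>
#include <cmath>

struct Vec3 { double x, y, z; };
struct Vec2 { double u, v; };

const double kPi = 3.14159265358979323846;

// Map a non-zero direction to longitude/latitude texture coordinates in [0, 1].
// Assumed convention: +Y is up, u = 0.5 looks down +Z, v = 0 is the top pole.
Vec2 directionToLongLat(const Vec3& d) {
    const double length = std::sqrt(d.x * d.x + d.y * d.y + d.z * d.z);

    // Longitude: angle around the up axis, in (-pi, pi].
    const double longitude = std::atan2(d.x, d.z);

    // Polar angle measured from +Y, in [0, pi]. The clamp guards against
    // rounding pushing the cosine slightly outside [-1, 1].
    const double cosPolar = std::max(-1.0, std::min(1.0, d.y / length));
    const double polar = std::acos(cosPolar);

    Vec2 uv;
    uv.u = longitude / (2.0 * kPi) + 0.5;
    uv.v = polar / kPi;
    return uv;
}
```

A generator would loop over every texel of every cube face, build the direction through that texel, call a function like this one, and sample the source image at the resulting coordinate. Repeating that for each smaller face size is one way to produce the mip chain.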

A few things were close to being done but just didn't get in. I hope to finalize some of these features and get them into a later release:

* (Shader Based) Anti-aliasing. Works well with the Raytraced Planet but ran into issues with Atmosphere.

* Particles properly interacting with glow and bokeh.

* Particle animation (via film strip or 3D texture -- more likely film strip since 3D texture mips and filtering aren't handled very well in D3D9)

* Megascreenshot: a tool that allows capturing incredibly high-resolution screenshots (e.g. 10kx10k) for print or desktop backgrounds

* Video Capture

* Stereoscopic rendering, both real and virtual (similar to the technique that Sony and Crytek have shown), including anaglyph for debugging.

I decided to do this app using D3D 9.0c because of my strong familiarity with the API, but I plan to port it to D3D 11 as soon as I get some new hardware. Most of the features weren't too difficult to implement using Shader Model 3, although I definitely ran into a few limits. I also ran into a few bugs and had a LOT of problems with the HLSL/FX compiler, which were mostly resolved with work-arounds. Because of this choice, porting to the Xbox 360 or PS3 would be trivial, though a LOT of work would need to be done to get some reasonable performance (I could see 30 FPS being within the realm of possibility).

There are likely a few bugs I haven't found yet that depend on different computer configurations, but the biggest issue is actually one with the CineScript system. I decided to use absolute times for the keyframes, so when you plop one down its time looks like hh:mm:ss:fff. Despite the fact that I use an update thread that maintains a locked time-step, it's possible for a specific keyframe to come slightly after it was supposed to, since the stepping occurs at a specific rate (30 frames per second) that might not evenly line up with the keyframes. This results in certain keyframes coming in slightly late, which only looks bad when the camera moved but a scene aspect was not updated in coordination (since everything is controlled by keyframes). I have some ideas on how to fix this and will release an update as soon as I do.

Another issue is how the bokeh interacts with scene object silhouettes. The way I ended up doing Bokeh is very similar to how Lost Planet did theirs, for anyone familiar with the approach. Basically, a screen space grid is created with quads for each bokeh circle (or whatever pattern you want). The circles are adjusted on a sub-pixel level so they line up correctly with the brightest pixel, which is determined much like normal HDR Glow/Bloom. This works pretty well, but because the atmosphere is translucent I ran into issues with DOF/Bokeh that required the atmosphere to write depth. Because of this, and because of the intensity of the atmosphere, it will sometimes show up as Bokeh when it should _not_. I haven't found an acceptable solution for this yet but I plan to put some more thought into it in the future.

So after all that, I hope you enjoy the demo. If you have any feedback, constructive criticism or just words of support please feel free to share in the comments or shoot me an email. A number of lessons were learned and I'll try to find some time to share them in future posts. In the meantime I'll leave you with some screengrabs.

-Aurelio

5 comments:

The link to the demo is broken.

Of course as soon as I post this it's fixed :)

Wow, excellent, love it.

And the music fits perfectly.

Again, congratulations on this demo. My last criticism: if I were you, I would try to align the origin of the light shaft with what appears to be the sun. But I understand that you may have tweaked it to get the light shafts you desired.

And a question: for the asteroid, are you using ray-traced displacement mapping or just ray-tracing inside a volume?

sebh: for the asteroid I'm using raytraced displacement mapping -- essentially stepping through a spherical volume defined by a cubemap. I considered using an actual 3D texture but this was faster for my purposes.
